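The Mellanox ConnectX-5 VPI adapter supports both Ethernet and InfiniBand port modes, so the mode of each port must be set explicitly. The example output below was captured on ConnectX-4 VPI adapters (MT4115), which are configured the same way.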

Check Status

    # mst status -v
    MST modules:
    ------------
    MST PCI module is not loaded
    MST PCI configuration module is not loaded

    PCI devices:
    ------------
    DEVICE_TYPE        MST                        PCI       RDMA     NET   NUMA
    ConnectX4(rev:0)   /dev/mst/mt4115_pciconf3   8b:00.0   mlx5_3         1
    ConnectX4(rev:0)   /dev/mst/mt4115_pciconf2   84:00.0   mlx5_2         1
    ConnectX4(rev:0)   /dev/mst/mt4115_pciconf1   0c:00.0   mlx5_1         0
    ConnectX4(rev:0)   /dev/mst/mt4115_pciconf0   05:00.0   mlx5_0         0

Start MST

    # mst start
    Starting MST (Mellanox Software Tools) driver set
    Loading MST PCI module - Success
    Create devices
    Unloading MST PCI module (unused) - Success

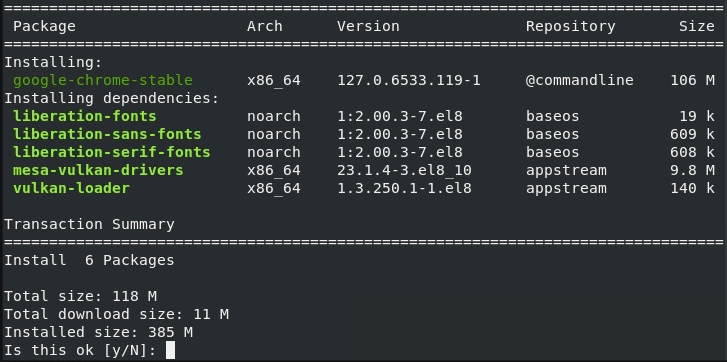
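On a host with several adapters, the `-d` argument passed to mlxconfig must be the MST device of the port you want to change. The `mst status -v` table above maps each MST device to its PCI address and RDMA device name, so this lookup can be scripted. Below is a minimal sketch. It assumes the column order shown in that table (DEVICE_TYPE, MST, PCI, RDMA, ...), and the function name `mst_dev_lookup` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the MST device path for an RDMA device name
# (e.g. mlx5_2) or a PCI address (e.g. 84:00.0).
# Reads `mst status -v` output on stdin and assumes the column order
#   DEVICE_TYPE  MST  PCI  RDMA  ...
# as in the sample output. Returns 1 if nothing matches.
mst_dev_lookup() {
    local key="$1"
    awk -v key="$key" '
        # Data rows carry the MST device path in column 2.
        $2 ~ /^\/dev\/mst\// && ($3 == key || $4 == key) {
            print $2
            found = 1
            exit
        }
        END { exit (found ? 0 : 1) }
    '
}
```

With the table shown earlier, `mst status -v | mst_dev_lookup mlx5_2` and `mst status -v | mst_dev_lookup 84:00.0` both print `/dev/mst/mt4115_pciconf2`.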

Change the port type to Ethernet (LINK_TYPE = 2)
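LINK_TYPE_P1 sets the protocol of port 1 (LINK_TYPE_P2 does the same for port 2 on dual-port cards). On these VPI adapters, 1 selects InfiniBand and 2 selects Ethernet. The command below can be wrapped in a small bash function. This is a minimal sketch: the names `link_type_value` and `set_link_type` and the `DRY_RUN` variable are hypothetical. With `DRY_RUN=1`, the function only prints the mlxconfig command, so it can be tried on a host without MST installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a protocol name to the mlxconfig LINK_TYPE value
# (1 = InfiniBand, 2 = Ethernet).
link_type_value() {
    local name
    name=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$name" in
        ib|infiniband) echo 1 ;;
        eth|ethernet)  echo 2 ;;
        *) echo "unknown link type: $1" >&2; return 1 ;;
    esac
}

# Hypothetical helper: set LINK_TYPE_P<port> on one MST device.
# Usage: set_link_type <mst-device> <port 1|2> <ib|eth>
# With DRY_RUN=1 the mlxconfig command is printed instead of run.
set_link_type() {
    local dev="$1" port="$2" value
    if [[ ! "$port" =~ ^[12]$ ]]; then
        echo "port must be 1 or 2, got: $port" >&2
        return 1
    fi
    value=$(link_type_value "$3") || return 1
    local cmd=(mlxconfig -d "$dev" set "LINK_TYPE_P${port}=${value}")
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
        echo "${cmd[*]}"
    else
        "${cmd[@]}"   # mlxconfig asks for confirmation before applying
    fi
}
```

For this step, `DRY_RUN=1 set_link_type /dev/mst/mt4115_pciconf2 1 eth` prints `mlxconfig -d /dev/mst/mt4115_pciconf2 set LINK_TYPE_P1=2`. Dropping `DRY_RUN` runs the command instead.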

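    # mlxconfig -d /dev/mst/mt4115_pciconf2 set LINK_TYPE_P1=2

Here /dev/mst/mt4115_pciconf2 is the device at PCI address 84:00.0 (RDMA device mlx5_2) in the mst status table. mlxconfig shows the current and new values and asks for confirmation before writing them. The new link type takes effect only after the adapter is reset, for example by rebooting the server.

Check that the port type was changed to Ethernet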

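    # ibdev2netdev
    mlx5_0 port 1 ==> ens1np0 (Down)
    mlx5_1 port 1 ==> enp12s0np0 (Down)
    mlx5_2 port 1 ==> enp132s0np0 (Up)
    mlx5_3 port 1 ==> enp139s0np0 (Down)

The reconfigured device, mlx5_2, is now bound to the Ethernet interface enp132s0np0, and its link is up.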
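The same check can be scripted, for example to run after the reboot. Below is a minimal sketch. It assumes the ibdev2netdev line format shown above (`<rdma-dev> port <n> ==> <netdev> (<state>)`), and the function name `port_netdev_state` is hypothetical. It reads ibdev2netdev output on stdin and prints the netdev name and link state for one RDMA device and port.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<netdev> <state>" for an RDMA device and port.
# Reads ibdev2netdev output on stdin, one line per port, in the form
#   mlx5_2 port 1 ==> enp132s0np0 (Up)
# The port defaults to 1. Returns 1 if no matching line is found.
port_netdev_state() {
    local dev="$1" port="${2:-1}"
    awk -v dev="$dev" -v port="$port" '
        $1 == dev && $2 == "port" && $3 == port && $4 == "==>" {
            state = $6
            gsub(/[()]/, "", state)   # strip the parentheses around Up/Down
            print $5, state
            found = 1
            exit
        }
        END { exit (found ? 0 : 1) }
    '
}
```

With the output above, `ibdev2netdev | port_netdev_state mlx5_2` prints `enp132s0np0 Up`.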
