One of the best ways to improve the performance of Microsoft Windows guests is to use paravirtualised (virtio) devices and drivers for KVM in the guests. This provides close to bare-metal performance (up to 95%). These drivers are provided by the virtio-win RPM package in RHEL.

1. The virtio-win package installs its driver images on the RHEL server under /usr/share/virtio-win/

# ls -l /usr/share/virtio-win/

drwxr-xr-x. 4 root root 4096 Oct 13 10:24 drivers

drwxr-xr-x. 2 root root 4096 Oct 13 10:24 guest-agent

-rw-r--r--. 1 root root 2949120 May 29 2014 virtio-win-1.7.1_amd64.vfd

-rw-r--r--. 1 root root 149004288 May 29 2014 virtio-win-1.7.1.iso

-rw-r--r--. 1 root root 2949120 May 29 2014 virtio-win-1.7.1_x86.vfd

lrwxrwxrwx. 1 root root 26 Oct 13 10:24 virtio-win_amd64.vfd -> virtio-win-1.7.1_amd64.vfd

lrwxrwxrwx. 1 root root 20 Oct 13 10:24 virtio-win.iso -> virtio-win-1.7.1.iso

lrwxrwxrwx. 1 root root 24 Oct 13 10:24 virtio-win_x86.vfd -> virtio-win-1.7.1_x86.vfd

You may want to add these images to the ISO library. You can then attach the virtio-win*.vfd image to the virtual floppy drive before manually installing Windows.
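To check which versioned image each convenience symlink currently points to (for example after a package update), a small shell sketch along these lines may help. The function name `show_virtio_links` is hypothetical, and the default directory is simply the path from the listing above.

```shell
#!/bin/sh
# Hypothetical helper: print every symlink in a directory with its resolved target.
# The default directory is the virtio-win location shown in the listing above.
show_virtio_links() {
    dir="${1:-/usr/share/virtio-win}"
    for f in "$dir"/*; do
        # Report symbolic links only; regular files and directories are skipped.
        if [ -L "$f" ]; then
            printf '%s -> %s\n' "$(basename "$f")" "$(readlink -f "$f")"
        fi
    done
}
```

Calling `show_virtio_links` with no argument inspects /usr/share/virtio-win; pass another directory as the first argument to inspect it instead.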

2. The rhev-tools-setup.iso image can be found in /usr/share/rhev-guest-tools-iso

# ls -l /usr/share/rhev-guest-tools-iso

-rw-r--r--. 1 root root 177272 Jul 29 16:30 LICENSES

-rw-r--r--. 1 root root 350181376 Jul 29 16:30 RHEV-toolsSetup_3.4_9.iso

lrwxrwxrwx. 1 root root 57 Oct 13 10:23 rhev-tools-setup.iso -> /usr/share/rhev-guest-tools-iso/RHEV-toolsSetup_3.4_9.iso

-rw-r--r--. 1 root root 924 Jul 29 16:30 SOURCES

3. Upload drivers and tools to the ISO Storage Domain

# rhevm-iso-uploader -u admin@internal list

Please provide the REST API password for the admin@internal oVirt Engine user (CTRL+D to abort):

ISO Storage Domain Name | Datacenter | ISO Domain Status

dmn_ixora_iso_vol | RH_Resource | active

# rhevm-iso-uploader -u admin@internal --iso-domain=dmn_ixora_iso_vol upload /usr/share/virtio-win/virtio-win-1.7.1_x86.vfd /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso

Please provide the REST API password for the admin@internal oVirt Engine user (CTRL+D to abort):
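Before uploading, it can be worth confirming that every image exists and is readable, since a dangling symlink would otherwise only surface once the upload is under way. The sketch below is one way to do that; the function name `check_images` is hypothetical, and the commented-out `rhevm-iso-uploader` call uses only the options already shown above.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every listed image is a readable regular file.
# Symlinks are followed, so a dangling link is reported as missing.
check_images() {
    status=0
    for img in "$@"; do
        if [ ! -f "$img" ] || [ ! -r "$img" ]; then
            echo "missing or unreadable: $img" >&2
            status=1
        fi
    done
    return "$status"
}

# Example use with the paths and ISO domain from this article (adjust for your site):
# check_images /usr/share/virtio-win/virtio-win-1.7.1_x86.vfd \
#              /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso &&
#   rhevm-iso-uploader -u admin@internal --iso-domain=dmn_ixora_iso_vol upload \
#              /usr/share/virtio-win/virtio-win-1.7.1_x86.vfd \
#              /usr/share/rhev-guest-tools-iso/rhev-tools-setup.iso
```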

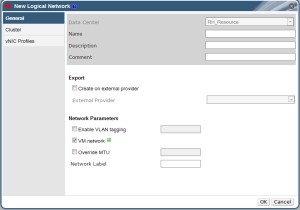

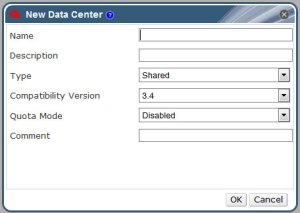

4. Create the new VM using the RHEV Manager

Remember to click “Run Once”.

Under “Boot Options”:

– Attach virtio-win-1.7.1_amd64.vfd to the floppy

– Attach the MS Windows 7 ISO to the CD
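The floppy image should match the guest's architecture. As a rough sketch, the mapping can be written down as below. The function name `vfd_for_arch` and the accepted architecture aliases are hypothetical; the file names are the symlink names from the /usr/share/virtio-win listing, and the mapping assumes that 64-bit Windows guests take the amd64 image and 32-bit guests the x86 image.

```shell
#!/bin/sh
# Hypothetical helper: map a guest architecture to the matching virtio-win floppy image.
# File names are the symlinks listed under /usr/share/virtio-win.
vfd_for_arch() {
    case "$1" in
        amd64|x86_64|x64) echo "virtio-win_amd64.vfd" ;;
        x86|i386|i686)    echo "virtio-win_x86.vfd" ;;
        *)
            echo "unknown guest architecture: $1" >&2
            return 1
            ;;
    esac
}
```

For a 64-bit Windows 7 guest, `vfd_for_arch amd64` prints the amd64 image name; whichever image is chosen must also have been uploaded to the ISO domain.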

5. Update Ethernet Drivers, PCI Simple Communication Controller by attaching the RH

6. Once you have booted into Windows 7, open Device Manager and update the drivers for the missing Ethernet and storage controller devices

You should see:

– Red Hat VirtIO SCSI controller

– Red Hat VirtIO SCSI pass-through controller

– Red Hat VirtIO Ethernet Adapter