Prerequisites:

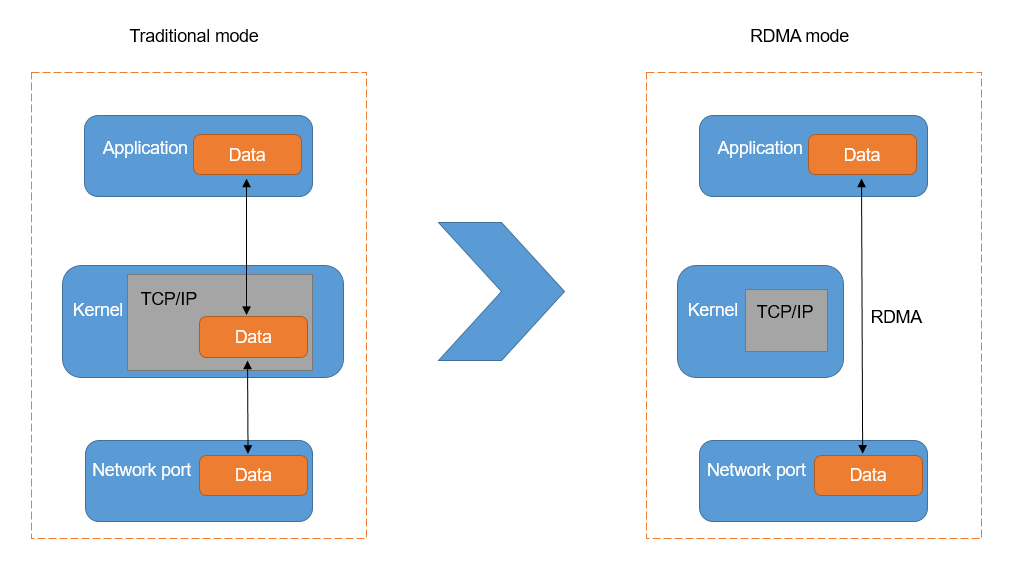

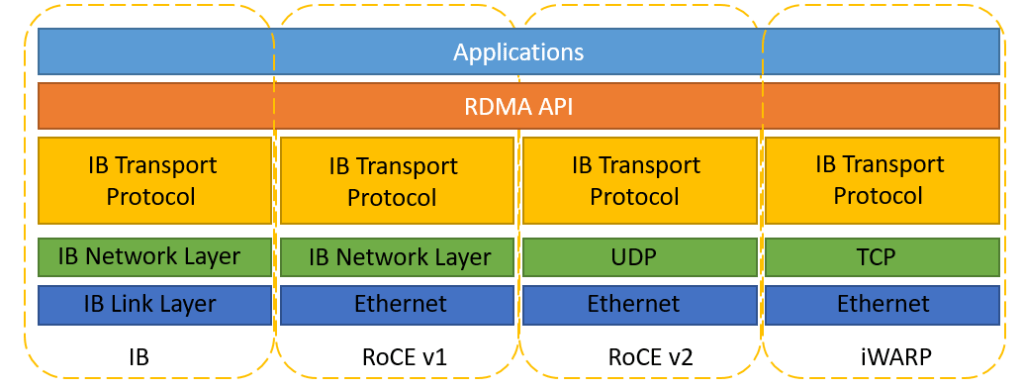

Before you start, read Basic Understanding RoCE and Infiniband.

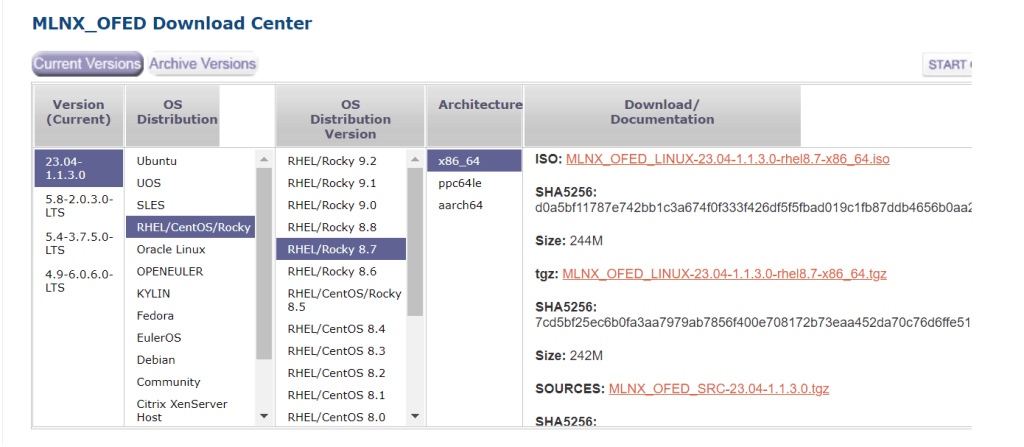

Step 1: Install Mellanox Package

First, install the Mellanox package, which you can download from https://developer.nvidia.com/networking/ethernet-software. You can install it either manually or with Ansible (see Installing Mellanox OFED (mlnx_ofed) packages using Ansible).

Step 2: Load the Drivers

Load the two kernel modules needed for RDMA and RoCE exchanges with the command:

# modprobe -a rdma_cm ib_umad

Step 3: Verify the drivers are loaded

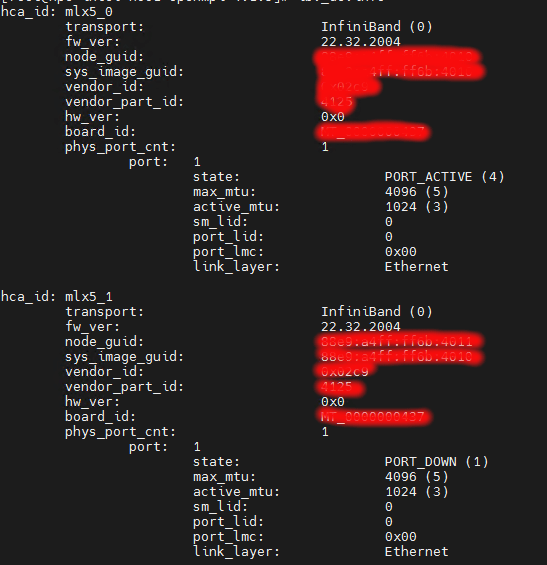

# ibv_devinfo
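Besides ibv_devinfo, it can help to confirm that the two modules from Step 2 are present in the kernel's module list. The following is a minimal sketch, not part of the Mellanox tooling: check_modules is a hypothetical helper name, and it assumes the modules were built as loadable modules (drivers compiled into the kernel do not appear in /proc/modules).

```shell
#!/bin/sh
# check_modules LISTING MODULE...
# Report whether each named module appears in a /proc/modules-style listing.
# The listing file is passed explicitly so the logic can be tried on sample data.
check_modules() {
    listing="$1"; shift
    missing=0
    for mod in "$@"; do
        # Each line of /proc/modules starts with the module name and a space.
        if grep -q "^${mod} " "$listing"; then
            echo "$mod: loaded"
        else
            echo "$mod: NOT loaded"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on a real host:
#   check_modules /proc/modules rdma_cm ib_umad
```

The function returns a non-zero status if any module is missing, so it can gate later steps in a script.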

Step 4: Set the RoCE to version 2

Set the version of the RoCE protocol to v2 by issuing the command below.

-d is the device, -p is the port, and -m is the version of RoCE:

[root@node1]# cma_roce_mode -d mlx5_0 -p 1 -m 2

RoCE v2
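When this step is scripted, the printed confirmation can be checked instead of read by eye. The sketch below wraps the same command and compares its output with the "RoCE v2" line shown above. set_roce_v2 and the CMA_ROCE_MODE variable are hypothetical names introduced here; the variable exists only so the wrapper can be tried against a stand-in command on a machine without the hardware.

```shell
#!/bin/sh
# Command to run; overridable so the logic can be exercised without hardware.
# CMA_ROCE_MODE is a hypothetical variable name used only in this sketch.
: "${CMA_ROCE_MODE:=cma_roce_mode}"

# set_roce_v2 DEVICE PORT
# Request RoCE v2 on the device/port and confirm the tool reports "RoCE v2".
set_roce_v2() {
    dev="$1"
    port="$2"
    out=$("$CMA_ROCE_MODE" -d "$dev" -p "$port" -m 2) || return 1
    if [ "$out" = "RoCE v2" ]; then
        echo "$dev port $port: RoCE v2 set"
    else
        echo "$dev port $port: unexpected output: $out" >&2
        return 1
    fi
}

# Usage on a real host (as root):
#   set_roce_v2 mlx5_0 1
```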

Step 5: Check which RoCE devices are enabled on the Ethernet interfaces

[root@node-1]# ibdev2netdev

mlx5_0 port 1 ==> ens1f0 (Up)

mlx5_1 port 1 ==> ens1f1 (Down)
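On hosts with many adapters it may be easier to filter this output than to scan it. The following sketch assumes the line format shown above (device, the word "port", port number, "==>", interface name, link state in parentheses) and prints only the ports whose link is Up. list_up_ports is a hypothetical helper name.

```shell
#!/bin/sh
# list_up_ports
# Read ibdev2netdev-style lines on stdin and print
# "rdma_device port netdev" for every link reported as Up.
list_up_ports() {
    awk '$4 == "==>" && $6 == "(Up)" { print $1, $3, $5 }'
}

# Usage on a real host:
#   ibdev2netdev | list_up_ports
```

With the sample output above, only mlx5_0 (port 1, ens1f0) would be listed, since mlx5_1 is Down.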

References: