Join the NVIDIA Jetson team for the latest episode of our AMA-style live stream, Jetson AI Labs.

Tools to Show your System Configuration

Tool 1: Display Information about CPU Architecture

[user1@node1 ~]$ lscpu
Architecture:          x86_64
CPU op-mode(s):        32-bit, 64-bit
Byte Order:            Little Endian
CPU(s):                32
On-line CPU(s) list:   0-31
Thread(s) per core:    2
Core(s) per socket:    8
Socket(s):             2
NUMA node(s):          2
Vendor ID:             GenuineIntel
CPU family:            6
Model:                 85
Model name:            Intel(R) Xeon(R) Gold 6134 CPU @ 3.20GHz
Stepping:              4
CPU MHz:               3200.000
BogoMIPS:              6400.00
Virtualization:        VT-x
L1d cache:             32K
L1i cache:             32K
L2 cache:              1024K
L3 cache:              25344K
NUMA node0 CPU(s):     0-7,16-23
NUMA node1 CPU(s):     8-15,24-31
.....
.....
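The topology fields in the lscpu listing fit together arithmetically: sockets times cores per socket times threads per core should equal the CPU(s) count. Below is a minimal Python sketch of that check, using a few lines copied from the listing above. The helper names `parse_lscpu` and `logical_cpus` are illustrative only and are not part of any tool.

```python
def parse_lscpu(text):
    """Turn 'Key: value' lines from lscpu output into a dictionary."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip lines without a colon, e.g. the '.....' filler
            fields[key.strip()] = value.strip()
    return fields


def logical_cpus(sockets, cores_per_socket, threads_per_core):
    """Logical CPU count implied by the socket/core/thread topology."""
    return sockets * cores_per_socket * threads_per_core


# A subset of the lscpu listing shown above.
SAMPLE = """\
CPU(s):                32
Thread(s) per core:    2
Core(s) per socket:    8
Socket(s):             2
"""

info = parse_lscpu(SAMPLE)
total = logical_cpus(
    int(info["Socket(s)"]),
    int(info["Core(s) per socket"]),
    int(info["Thread(s) per core"]),
)
print(total, total == int(info["CPU(s)"]))
```

For this node, 2 sockets x 8 cores x 2 threads gives 32, which matches the reported CPU(s) value.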

Tool 2: List all PCI devices

[user1@node1 ~]# lspci -t -vv
-+-[0000:d7]-+-00.0-[d8]--
 |           +-01.0-[d9]--
 |           +-02.0-[da]--
 |           +-03.0-[db]--
 |           +-05.0  Intel Corporation Device 2034
 |           +-05.2  Intel Corporation Sky Lake-E RAS Configuration Registers
 |           +-05.4  Intel Corporation Device 2036
 |           +-0e.0  Intel Corporation Device 2058
 |           +-0e.1  Intel Corporation Device 2059
 |           +-0f.0  Intel Corporation Device 2058
 |           +-0f.1  Intel Corporation Device 2059
 |           +-10.0  Intel Corporation Device 2058
 |           +-10.1  Intel Corporation Device 2059
 |           +-12.0  Intel Corporation Sky Lake-E M3KTI Registers
 |           +-12.1  Intel Corporation Sky Lake-E M3KTI Registers
 |           +-12.2  Intel Corporation Sky Lake-E M3KTI Registers
 |           +-12.4  Intel Corporation Sky Lake-E M3KTI Registers
 |           +-12.5  Intel Corporation Sky Lake-E M3KTI Registers
 |           +-15.0  Intel Corporation Sky Lake-E M2PCI Registers
 |           +-16.0  Intel Corporation Sky Lake-E M2PCI Registers
 |           +-16.4  Intel Corporation Sky Lake-E M2PCI Registers
 |           \-17.0  Intel Corporation Sky Lake-E M2PCI Registers
.....
.....

Tool 3: List all block devices

[user@node1 ~]# lsblk
sda               8:0    0  1.1T  0 disk
├─sda1            8:1    0  200M  0 part /boot/efi
├─sda2            8:2    0    1G  0 part /boot
└─sda3            8:3    0  1.1T  0 part
  ├─centos-root 253:0    0   50G  0 lvm  /
  ├─centos-swap 253:1    0    4G  0 lvm  [SWAP]
  └─centos-home 253:2    0    1T  0 lvm

Tool 4: See the flags the kernel was booted with

[user@node1 ~]$ cat /proc/cmdline
BOOT_IMAGE=/vmlinuz-3.10.0-693.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet LANG=en_US.UTF-8
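A boot command line like the one above is a space-separated list of bare flags and key=value pairs, and some keys (here `rd.lvm.lv`) can appear more than once. The following is a small Python sketch that splits such a line into a dictionary. The function name `parse_cmdline` is hypothetical, and the string is copied from the output above rather than read from the running system.

```python
import shlex


def parse_cmdline(cmdline):
    """Map each kernel parameter to the list of values it was given.

    Bare flags such as 'quiet' are stored with a value of None.
    """
    params = {}
    for token in shlex.split(cmdline):  # shlex keeps quoted values together
        key, sep, value = token.partition("=")
        params.setdefault(key, []).append(value if sep else None)
    return params


CMDLINE = (
    "BOOT_IMAGE=/vmlinuz-3.10.0-693.el7.x86_64 root=/dev/mapper/centos-root ro "
    "crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet "
    "LANG=en_US.UTF-8"
)

params = parse_cmdline(CMDLINE)
print(params["root"])       # root device
print(params["rd.lvm.lv"])  # both LVM volumes activated in the initramfs
print("quiet" in params)    # bare flag present?
```

On a live Linux system the same function could be fed the contents of /proc/cmdline instead of the hard-coded string.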

Tool 5: Display available network interfaces

[root@hpc-gekko1 ~]# ifconfig -a
eno1: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        ether XX:XX:XX:XX:XX:XX  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
.....
.....
.....

Tool 6: Using dmidecode to find hardware information

See Using dmidecode to find hardware information

Benchmarking Tools for Memory Bandwidth

What is Bandwidth

Bandwidth is an artificial benchmark primarily for measuring memory bandwidth on x86 and x86_64 based computers. It is useful for identifying weaknesses in a computer’s memory subsystem, bus architecture, cache architecture, and the processor itself.

bandwidth also tests some libc functions and, under GNU/Linux, it attempts to test framebuffer memory access speed if the framebuffer device is available.

Prerequisites:

NASM, GNU Compiler Suite

Compiling NASM

Bandwidth-1.9.4 requires the latest version of NASM.

% tar -xvf nasm-2.15.05.tar.gz

% cd nasm-2.15.05

% ./configure

% make

% make install

You should now have the nasm binary. Make sure you update $PATH to include the directory that contains it.

Compiling Bandwidth-1.9.4

% tar -zxvf bandwidth-1.9.4.tar.gz

% cd bandwidth-1.9.4

% make bandwidth64

You should now have the bandwidth64 binary.

Run the Test

% ./bandwidth64
Sequential read (64-bit), size = 256 B, loops = 1132462080, 55292.9 MB/s
Sequential read (64-bit), size = 384 B, loops = 765632322, 56075.0 MB/s
Sequential read (64-bit), size = 512 B, loops = 573833216, 56028.0 MB/s
Sequential read (64-bit), size = 640 B, loops = 457595948, 55857.6 MB/s
Sequential read (64-bit), size = 768 B, loops = 382990923, 56092.5 MB/s
Sequential read (64-bit), size = 896 B, loops = 326929770, 55865.7 MB/s
Sequential read (64-bit), size = 1024 B, loops = 285671424, 55789.1 MB/s
Sequential read (64-bit), size = 1280 B, loops = 229320072, 55973.6 MB/s
Sequential read (64-bit), size = 2 kB, loops = 143425536, 56016.5 MB/s
Sequential read (64-bit), size = 3 kB, loops = 95550030, 55977.6 MB/s
Sequential read (64-bit), size = 4 kB, loops = 71729152, 56036.7 MB/s
Sequential read (64-bit), size = 6 kB, loops = 47510700, 55667.7 MB/s
Sequential read (64-bit), size = 8 kB, loops = 35856384, 56020.1 MB/s
Sequential read (64-bit), size = 12 kB, loops = 23738967, 55631.2 MB/s
Sequential read (64-bit), size = 16 kB, loops = 17666048, 55199.2 MB/s
Sequential read (64-bit), size = 20 kB, loops = 14139216, 55228.2 MB/s
Sequential read (64-bit), size = 24 kB, loops = 11771760, 55178.0 MB/s
Sequential read (64-bit), size = 28 kB, loops = 10097100, 55212.2 MB/s
Sequential read (64-bit), size = 32 kB, loops = 8679424, 54246.3 MB/s
Sequential read (64-bit), size = 34 kB, loops = 7160732, 47543.7 MB/s
Sequential read (64-bit), size = 36 kB, loops = 6404580, 45029.4 MB/s
Sequential read (64-bit), size = 40 kB, loops = 5729724, 44762.0 MB/s
Sequential read (64-bit), size = 48 kB, loops = 4782960, 44837.4 MB/s
Sequential read (64-bit), size = 64 kB, loops = 3603456, 45042.9 MB/s
Sequential read (64-bit), size = 128 kB, loops = 1806848, 45168.2 MB/s
Sequential read (64-bit), size = 192 kB, loops = 1204753, 45175.8 MB/s
Sequential read (64-bit), size = 256 kB, loops = 897792, 44882.4 MB/s
Sequential read (64-bit), size = 320 kB, loops = 711144, 44435.3 MB/s
Sequential read (64-bit), size = 384 kB, loops = 590070, 44254.7 MB/s
Sequential read (64-bit), size = 512 kB, loops = 440064, 43995.8 MB/s
Sequential read (64-bit), size = 768 kB, loops = 285005, 42741.0 MB/s
Sequential read (64-bit), size = 1024 kB, loops = 170048, 34006.4 MB/s
Sequential read (64-bit), size = 1280 kB, loops = 120615, 30152.0 MB/s
Sequential read (64-bit), size = 1536 kB, loops = 91434, 27427.4 MB/s
Sequential read (64-bit), size = 1792 kB, loops = 77688, 27180.4 MB/s
Sequential read (64-bit), size = 2048 kB, loops = 64320, 25722.9 MB/s
Sequential read (64-bit), size = 2304 kB, loops = 56252, 25313.3 MB/s
Sequential read (64-bit), size = 2560 kB, loops = 49550, 24772.9 MB/s
Sequential read (64-bit), size = 2816 kB, loops = 47334, 26023.8 MB/s
Sequential read (64-bit), size = 3072 kB, loops = 41916, 25142.8 MB/s
Sequential read (64-bit), size = 3328 kB, loops = 37525, 24388.1 MB/s
Sequential read (64-bit), size = 3584 kB, loops = 35982, 25184.6 MB/s
Sequential read (64-bit), size = 4096 kB, loops = 31824, 25457.4 MB/s
Sequential read (64-bit), size = 5120 kB, loops = 25128, 25116.7 MB/s
Sequential read (64-bit), size = 6144 kB, loops = 22460, 26948.8 MB/s
Sequential read (64-bit), size = 7168 kB, loops = 18081, 25309.1 MB/s
Sequential read (64-bit), size = 8192 kB, loops = 14952, 23921.5 MB/s
Sequential read (64-bit), size = 9216 kB, loops = 13692, 24642.6 MB/s
Sequential read (64-bit), size = 10240 kB, loops = 12144, 24280.2 MB/s
Sequential read (64-bit), size = 12288 kB, loops = 9465, 22713.4 MB/s
Sequential read (64-bit), size = 14336 kB, loops = 7628, 21357.8 MB/s
Sequential read (64-bit), size = 15360 kB, loops = 6580, 19735.0 MB/s
Sequential read (64-bit), size = 16384 kB, loops = 6068, 19413.2 MB/s
Sequential read (64-bit), size = 20480 kB, loops = 3636, 14541.5 MB/s
Sequential read (64-bit), size = 21504 kB, loops = 3741, 15711.6 MB/s
Sequential read (64-bit), size = 32768 kB, loops = 1266, 8102.1 MB/s
Sequential read (64-bit), size = 49152 kB, loops = 900, 8640.0 MB/s
Sequential read (64-bit), size = 65536 kB, loops = 566, 7238.3 MB/s
Sequential read (64-bit), size = 73728 kB, loops = 609, 8765.8 MB/s
Sequential read (64-bit), size = 98304 kB, loops = 455, 8726.8 MB/s
Sequential read (64-bit), size = 131072 kB, loops = 331, 8461.2 MB/s
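One way to read a run like this is to look for the block sizes at which throughput falls sharply, since these tend to mark the point where the working set no longer fits in a cache level. The sketch below, in Python, parses a few consecutive lines copied from the output above and reports drops larger than 10% between neighbouring sizes. The function names, the 10% threshold, and the reading of "kB" as 1024 bytes are assumptions made for illustration; this is not part of the bandwidth tool.

```python
import re

# Matches the size and throughput fields of one result line.
LINE = re.compile(r"size = (\d+) (B|kB), loops = \d+, ([\d.]+) MB/s")


def parse_results(text):
    """Return (size_in_bytes, MB_per_s) pairs in the order they appear."""
    results = []
    for size, unit, rate in LINE.findall(text):
        size_bytes = int(size) * (1024 if unit == "kB" else 1)  # assumed: kB = 1024 B
        results.append((size_bytes, float(rate)))
    return results


def find_drops(results, threshold=0.10):
    """List (previous_size, size, fractional_drop) where throughput falls by more than threshold."""
    drops = []
    for (prev_size, prev_rate), (size, rate) in zip(results, results[1:]):
        drop = (prev_rate - rate) / prev_rate
        if drop > threshold:
            drops.append((prev_size, size, drop))
    return drops


SAMPLE = """\
Sequential read (64-bit), size = 24 kB, loops = 11771760, 55178.0 MB/s
Sequential read (64-bit), size = 28 kB, loops = 10097100, 55212.2 MB/s
Sequential read (64-bit), size = 32 kB, loops = 8679424, 54246.3 MB/s
Sequential read (64-bit), size = 34 kB, loops = 7160732, 47543.7 MB/s
Sequential read (64-bit), size = 36 kB, loops = 6404580, 45029.4 MB/s
Sequential read (64-bit), size = 40 kB, loops = 5729724, 44762.0 MB/s
"""

results = parse_results(SAMPLE)
drops = find_drops(results)
for prev_size, size, drop in drops:
    print(f"{prev_size // 1024} kB -> {size // 1024} kB: {drop:.1%} slower")
```

In this excerpt the only flagged step is between 32 kB and 34 kB, which would be consistent with a 32 KB L1 data cache like the one in the lscpu listing earlier, if the benchmark was run on a comparable machine.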

There is an interesting collection of commentaries at https://zsmith.co/bandwidth.php

Installing Julia Programming Language on CentOS 7

Julia is a high-level, high-performance dynamic language for technical computing. The main homepage for Julia can be found at julialang.org.

Step 1: Download the Julia binary. Version 1.5.3 is used here; check julialang.org for the latest release.

% wget https://julialang-s3.julialang.org/bin/linux/x64/1.5/julia-1.5.3-linux-x86_64.tar.gz

Step 2: Extract the Julia Tar GZ

% tar -zxvf julia-1.5.3-linux-x86_64.tar.gz

Step 3: Move the unpacked folder to /usr/local

% mv julia-1.5.3 /usr/local

Try Julia

Compiling Quantum ESPRESSO-6.7.0 with BEEF and Intel MPI 2018 on CentOS 7

Step 1: Download Quantum ESPRESSO 6.7.0 from Quantum ESPRESSO v. 6.7

% tar -zxvf q-e-qe-6.7.0.tar.gz

Step 2: Remember to source the Intel Compilers and indicate MKLROOT in your .bashrc

source /usr/local/intel/2018u3/mkl/bin/mklvars.sh intel64

source /usr/local/intel/2018u3/parallel_studio_xe_2018/bin/psxevars.sh intel64

source /usr/local/intel/2018u3/compilers_and_libraries/linux/bin/compilervars.sh intel64

source /usr/local/intel/2018u3/impi/2018.3.222/bin64/mpivars.sh intel64

Step 3a: Install libbeef

Step 3b: Create a file called setup.sh and copy the following contents into it

export F90=mpiifort

export F77=mpiifort

export MPIF90=mpiifort

export CC=mpiicc

export CPP="icc -E"

export CFLAGS=$FCFLAGS

export AR=xiar

#export LDFLAGS="-L/usr/local/intel/2018u3/mkl/lib/intel64"

#export CPPFLAGS="-I/usr/local/intel/2018u3/mkl/include/fftw"

export BEEF_LIBS="-L/usr/local/libbeef-0.1.3/lib -lbeef"

export BLAS_LIBS="-lmkl_intel_lp64 -lmkl_intel_thread -lmkl_core"

export LAPACK_LIBS="-lmkl_blacs_intelmpi_lp64"

export SCALAPACK_LIBS="-lmkl_scalapack_lp64 -lmkl_blacs_intelmpi_lp64"

#export FFT_LIBS="-lmkl_intel_lp64 -lmkl_sequential -lmkl_core -lmkl_scalapack_lp64"

./configure --enable-parallel --enable-openmp --enable-shared --with-scalapack=intel --prefix=/usr/local/espresso-6.7.0 | tee Configure.out

Step 4: Run the script, then build and install

% ./setup.sh

% make all

% make install

(I prefer a plain “make all” rather than “make all -j 16” so that any errors are easy to see and trace.)

If successful, you will see in your bin folder

Using qperf to measure network bandwidth and latency

qperf is a network bandwidth and latency measurement tool that works over many transports, including TCP/IP, RDMA, UDP and SCTP.

Step 1: Installing qperf on both Server and Client

Server> # yum install qperf

Client> # yum install qperf

Step 2: Open Up the Firewall on the Server

# firewall-cmd --permanent --add-port=19765-19766/tcp

# firewall-cmd --reload

The server listens on TCP port 19765 by default. Note that once qperf makes a connection, it uses a control port and a data port. The default control port is 19765, but a data port must also be opened to run the tests; in this example it is 19766.

Step 3: Have the Server listen (as qperf server)

Server> $ qperf

Step 4: Connect to qperf Server with qperf Client and measure bandwidth

Client > $ qperf -ip 19766 -t 60 qperf_server_ip_address tcp_bw

tcp_bw:

bw = 2.52 GB/sec
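qperf reports bandwidth in bytes per second, while link speeds are usually quoted in bits per second, so it helps to convert before comparing against the nominal NIC speed. A minimal Python sketch of that conversion follows. It assumes qperf's KB/MB/GB are decimal units (powers of 1000), which should be checked against the qperf man page for your version; the function names are illustrative.

```python
import re

# Assumption: qperf's KB/MB/GB are decimal (powers of 1000), not 1024-based.
UNIT_BYTES = {"KB": 1e3, "MB": 1e6, "GB": 1e9}


def parse_bw(line):
    """Return bytes per second from a line such as 'bw = 2.52 GB/sec'."""
    match = re.fullmatch(r"\s*bw\s*=\s*([\d.]+)\s*(\w+)/sec\s*", line)
    if match is None:
        raise ValueError(f"unrecognised bandwidth line: {line!r}")
    value, unit = match.groups()
    return float(value) * UNIT_BYTES[unit]


def to_gbit_per_sec(bytes_per_sec):
    """Convert bytes per second to gigabits per second (8 bits per byte)."""
    return bytes_per_sec * 8 / 1e9


rate = parse_bw("bw = 2.52 GB/sec")
print(f"{to_gbit_per_sec(rate):.2f} Gbit/s")
```

Under that assumption, the 2.52 GB/sec measured above corresponds to roughly 20 Gbit/s.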

Step 5: Connect to qperf Server with qperf Client and measure latency

qperf -vvs qperf_server_ip_address tcp_lat

tcp_lat:

latency = 20.7 us

msg_rate = 48.1 K/sec

loc_send_bytes = 48.2 KB

loc_recv_bytes = 48.2 KB

loc_send_msgs = 48,196

loc_recv_msgs = 48,196

rem_send_bytes = 48.2 KB

rem_recv_bytes = 48.2 KB

rem_send_msgs = 48,197

rem_recv_msgs = 48,197

How to check NFS Client connection from NFS Server

On the NFS server, run the command below. In this example the NFS server is 192.168.0.1 and the NFS port is 2049.

# netstat -an | grep 192.168.0.1:2049

tcp 0 0 192.168.0.1:2049 192.168.0.10:965 ESTABLISHED

(192.168.0.10 is the client)
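When many clients are connected, the same netstat output can be filtered programmatically. The Python sketch below extracts the client addresses from lines in the format shown above. The first sample line is taken from this post; the LISTEN line is a made-up example added to show that non-established sockets are skipped. The function name `nfs_clients` is hypothetical.

```python
def nfs_clients(netstat_output, server="192.168.0.1", port=2049):
    """Return the sorted client IPs with an ESTABLISHED connection to server:port."""
    local = f"{server}:{port}"
    clients = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: proto, recv-q, send-q, local address, foreign address, state
        if (
            len(fields) >= 6
            and fields[0].startswith("tcp")
            and fields[3] == local
            and fields[5] == "ESTABLISHED"
        ):
            clients.add(fields[4].rsplit(":", 1)[0])  # strip the client port
    return sorted(clients)


SAMPLE = """\
tcp 0 0 192.168.0.1:2049 192.168.0.10:965 ESTABLISHED
tcp 0 0 0.0.0.0:2049 0.0.0.0:* LISTEN
"""

clients = nfs_clients(SAMPLE)
print(clients)
```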

Red Hat Summit 2021

To Register, See https://www.redhat.com/en/summit

How to configure NFS on CentOS 7

Step 1: Do a Yum Install

# yum install nfs-utils rpcbind

Step 2: Enable the Service at Boot Time

# systemctl enable nfs-server

# systemctl enable rpcbind

# systemctl enable nfs-lock (it does not need to be enabled since rpc-statd.service is static.)

# systemctl enable nfs-idmap (it does not need to be enabled since nfs-idmapd.service is static.)

Step 3: Start the Services

# systemctl start rpcbind

# systemctl start nfs-server

# systemctl start nfs-lock

# systemctl start nfs-idmap

Step 4: Confirm the status of NFS

# systemctl status nfs

Step 5: Create a mount point

# mkdir /shared-data

Step 6: Define the Share in /etc/exports

# vim /etc/exports

Add the following line to the file:

/shared-data 192.168.0.0/16(rw,no_root_squash)
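The export entry grants access to every address inside 192.168.0.0/16. As a quick sanity check before mounting from a client, the Python sketch below parses an entry of this simple form and tests whether a given client IP falls inside the exported network. It handles only a single "path network(options)" entry; hostnames, wildcards, and multiple client specifications, which /etc/exports also allows, are deliberately not covered. The function names are illustrative.

```python
import ipaddress
import re


def parse_export(line):
    """Split 'path network(options)' into (path, network, options)."""
    path, spec = line.split(None, 1)
    match = re.fullmatch(r"(\S+?)\((.*)\)", spec.strip())
    if match is None:
        raise ValueError(f"unsupported export entry: {line!r}")
    network = ipaddress.ip_network(match.group(1))
    options = match.group(2).split(",")
    return path, network, options


def client_allowed(line, client_ip):
    """True if client_ip is inside the network named in the export entry."""
    _, network, _ = parse_export(line)
    return ipaddress.ip_address(client_ip) in network


ENTRY = "/shared-data 192.168.0.0/16(rw,no_root_squash)"

path, network, options = parse_export(ENTRY)
print(path, network, options)
print(client_allowed(ENTRY, "192.168.0.10"))  # inside 192.168.0.0/16
print(client_allowed(ENTRY, "10.0.0.5"))      # outside the exported network
```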

Step 7: Export the Share

# exportfs -rv

Step 8: Restart the NFS Services

# systemctl restart nfs-server

Step 9: Configure the Firewall

# firewall-cmd --add-service=nfs --zone=internal --permanent

# firewall-cmd --add-service=mountd --zone=internal --permanent

# firewall-cmd --add-service=rpc-bind --zone=internal --permanent


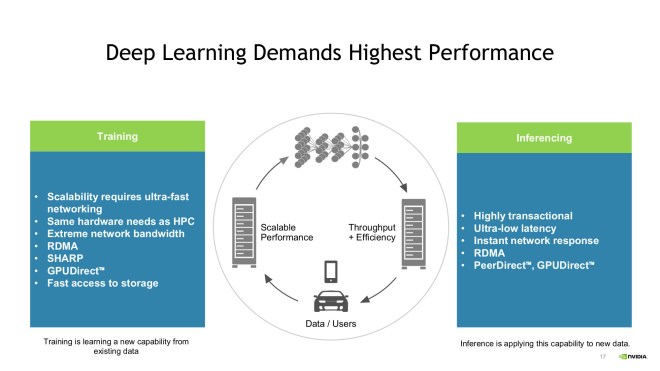

Performance Required for Deep Learning

I wanted to find out which system, network, and protocol features are essential for speeding up deep learning training and/or inferencing. Training and inferencing do not necessarily need the same level of requirements. I received the following information during an NVIDIA presentation.

Training:

- Scalability requires ultra-fast networking

- Same hardware needs as HPC

- Extreme network bandwidth

- RDMA

- SHARP (Mellanox Scalable Hierarchical Aggregation and Reduction Protocol)

- GPUDirect (https://developer.nvidia.com/gpudirect)

- Fast Access Storage

Inferencing:

- Highly Transactional

- Ultra-low Latency

- Instant Network Response

- RDMA

- PeerDirect, GPUDirect