The NGC catalog is a hub of GPU-optimized AI, high-performance computing (HPC), and data analytics software that simplifies and accelerates end-to-end workflows.

NVIDIA

GTC 2021 Keynote with NVIDIA CEO Jensen Huang

NVIDIA CEO Jensen Huang announced NVIDIA’s first data center CPU, Grace, named after Grace Hopper, a U.S. Navy rear admiral and computer programming pioneer. Grace is a highly specialized processor targeting the largest data-intensive HPC and AI applications, such as training next-generation natural language processing models with more than one trillion parameters.
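For a rough sense of scale, assume (purely for illustration) that each parameter is stored as a 16-bit value, i.e. 2 bytes. The weights of a one-trillion-parameter model alone then occupy:

$$10^{12}\ \text{parameters} \times 2\ \tfrac{\text{bytes}}{\text{parameter}} = 2 \times 10^{12}\ \text{bytes} \approx 2\ \text{TB}$$

Training needs additional memory on top of this for gradients, optimizer state, and activations.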

To further accelerate the infrastructure on which hyperscale data centers, workstations, and supercomputers are built, Huang announced the NVIDIA BlueField-3 DPU.

According to NVIDIA, the next-generation data processing unit will deliver the most powerful software-defined networking, storage, and cybersecurity acceleration capabilities.

Where BlueField-2 offloaded the equivalent of 30 CPU cores, it would take 300 CPU cores to secure, offload, and accelerate network traffic at 400 Gbps the way BlueField-3 does, a 10x leap in performance, Huang explained.
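The 10x figure follows directly from the two CPU-core equivalents Huang quoted:

$$\frac{300\ \text{CPU cores (BlueField-3)}}{30\ \text{CPU cores (BlueField-2)}} = 10$$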

Jetson AI Labs – E03 – March 25, 2021

Using multiple GPUs for Machine Learning

Taken from Sharcnet HPC

The video considers two cases: GPUs inside a single node, and GPUs spread across multiple nodes.
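To make the idea concrete, here is a minimal sketch of synchronous data parallelism, one common way of using several GPUs for training (the video may cover other approaches too). It is a simulation under loud assumptions: plain Python lists stand in for GPUs, and a simple in-memory average stands in for the all-reduce that a real communication library such as NCCL would perform. The helper names (`local_gradient`, `all_reduce_mean`, `split_into_shards`, `train_data_parallel`) are hypothetical and chosen only for illustration.

```python
# Minimal, CPU-only simulation of synchronous data-parallel training.
# Each "GPU" is just a Python list of samples; no real GPU or communication
# library is used. The all-reduce step is simulated by averaging in memory.
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for a 1-D linear model y = w * x


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of the mean squared error (1/n) * sum((w*x - y)^2) w.r.t. w on one shard."""
    n = len(shard)
    return sum(2.0 * (w * x - y) * x for x, y in shard) / n


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for an all-reduce: every worker ends up with the average."""
    return sum(values) / len(values)


def split_into_shards(data: List[Sample], num_workers: int) -> List[List[Sample]]:
    """Round-robin split, one shard per worker. Assumes len(data) >= num_workers."""
    return [data[i::num_workers] for i in range(num_workers)]


def train_data_parallel(data: List[Sample], num_workers: int,
                        lr: float = 0.01, steps: int = 200, w: float = 0.0) -> float:
    shards = split_into_shards(data, num_workers)
    for _ in range(steps):
        # 1. Each worker computes a gradient on its own shard
        #    (in parallel on real hardware, sequentially here).
        grads = [local_gradient(w, shard) for shard in shards]
        # 2. Workers exchange and average gradients (within a node or across nodes).
        g = all_reduce_mean(grads)
        # 3. Every worker applies the same update, so model replicas stay identical.
        w -= lr * g
    return w
```

With `data = [(float(x), 3.0 * x) for x in range(1, 9)]`, `train_data_parallel(data, num_workers=4)` converges to w ≈ 3. Because the four shards have equal size, the averaged gradient equals the full-batch gradient. In this sketch, the single-node and multi-node cases differ only in where the communication of step 2 travels, which the simulation does not model.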

Jetson AI Labs – E02 – February 25, 2021

Join the NVIDIA Jetson team for the latest episode of our AMA-style live stream, Jetson AI Labs.

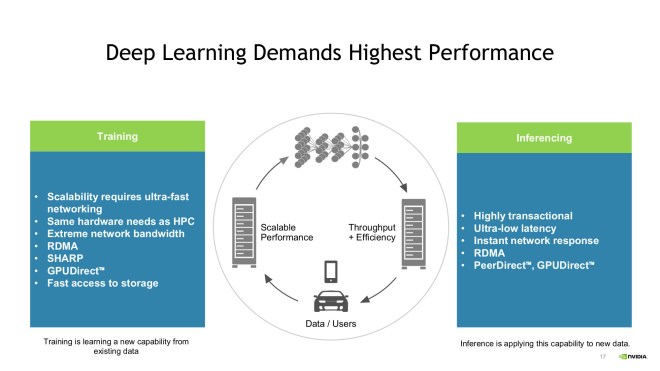

Performance Required for Deep Learning

I wanted to find out which system, network, and protocol capabilities are essential for speeding up deep learning training and/or inference. Training and inference may not need the same level of resources. The information below comes from an NVIDIA presentation.

Training:

- Scalability requires ultra-fast networking

- Same hardware needs as HPC

- Extreme network bandwidth

- RDMA

- SHARP (Mellanox Scalable Hierarchical Aggregation and Reduction Protocol)

- GPUDirect (https://developer.nvidia.com/gpudirect)

- Fast Access Storage
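Why bandwidth, and in-network reduction such as SHARP, matters for training can be seen from a rough estimate of the gradient all-reduce that data-parallel training performs on every step. This is a bandwidth-only sketch under stated assumptions: the ring formula, in which each node moves 2(N-1)/N × S bytes, is the standard per-node traffic for a ring all-reduce, while the in-network function is an idealized simplification of what SHARP aims for (each node sends its data once and receives the result once), not a model of its actual implementation. The function names and example numbers are hypothetical.

```python
# Back-of-the-envelope estimate of gradient all-reduce time per training step.
# Bandwidth-only model: latency, protocol overhead, and overlap of compute
# with communication are ignored, so real numbers will differ.


def ring_allreduce_seconds(size_bytes: float, num_nodes: int, link_gbps: float) -> float:
    """Ring all-reduce: each node sends (and receives) 2*(N-1)/N * S bytes."""
    bytes_per_node = 2 * (num_nodes - 1) / num_nodes * size_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_per_node / link_bytes_per_s


def in_network_allreduce_seconds(size_bytes: float, link_gbps: float) -> float:
    """Idealized in-network reduction (the idea behind SHARP): each node sends
    its S bytes once and receives the reduced S bytes once."""
    link_bytes_per_s = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return size_bytes / link_bytes_per_s
```

For a hypothetical model with 1 billion FP32 gradients (4e9 bytes) on 8 nodes with 100 Gbps links, `ring_allreduce_seconds(4e9, 8, 100)` is about 0.56 s per step and `in_network_allreduce_seconds(4e9, 100)` about 0.32 s; moving to 400 Gbps links cuts the ring estimate to about 0.14 s.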

Inference:

- Highly Transactional

- Ultra-low Latency

- Instant Network Response

- RDMA

- PeerDirect, GPUDirect

Virtual GPU version 11

Building Robotics Applications Using NVIDIA Isaac SDK

Programming GPUs with Fortran

From Sharcnet HPC

GPUs based on the NVIDIA CUDA architecture are usually programmed in C, but NVIDIA also provides a way to program GPUs in Fortran.

Implementing Real-time Vision AI Apps Using NVIDIA DeepStream SDK