For more information, do register at https://www.ddn.com/company/events/user-group-sc/

Month: November 2020

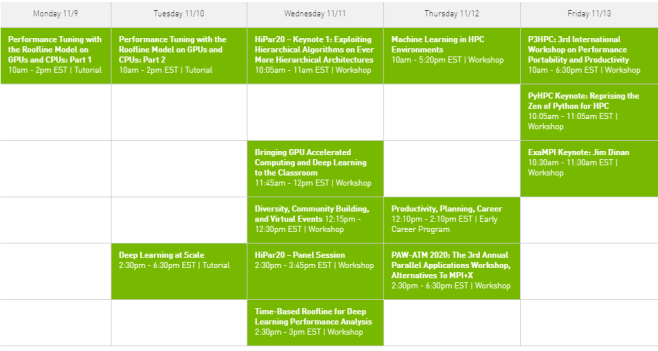

NVIDIA at SC20

Compiling and Using PDSH

What is PDSH?

Pdsh is a multithreaded remote shell client which executes commands on multiple remote hosts in parallel. Pdsh can use several different remote shell services, including standard “rsh”, Kerberos IV, and ssh. For more information, do look at https://github.com/chaos/pdsh

Compiling PDSH

% ./configure CC=/usr/local/intel/2019u5/bin/icc --enable-static-modules --without-rsh --with-ssh --without-ssh-connect-timeout-option --prefix=/usr/local/pdsh-2.33

% make

% make install
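After the install, it can help to confirm that the shell picks up the newly built binary. The following is a minimal sketch, assuming the install prefix from the configure line above. `pdsh -V` prints the version together with the loaded modules, so with this build ssh should be listed and rsh should not.

```shell
# Install prefix taken from the ./configure line; adjust if yours differs.
PDSH_PREFIX=/usr/local/pdsh-2.33
PATH="$PDSH_PREFIX/bin:$PATH"
export PATH

if command -v pdsh >/dev/null 2>&1; then
    # Print the pdsh version and the list of loaded modules.
    pdsh -V
else
    echo "pdsh not found; check that $PDSH_PREFIX/bin exists"
fi
```

To make this permanent, the PATH lines can go into your .bashrc.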

Specifying a list of hosts

% pdsh -w ^/home/user1/pdsh/hosts uptime

* pdsh takes the hostnames from the file listed after -w ^, which in this example is /home/user1/pdsh/hosts

node1: 16:52:59 up 527 days, 3:36, 0 users, load average: 39.46, 38.98, 37.59
node2: 16:53:00 up 30 days, 6:53, 0 users, load average: 44.54, 42.87, 42.20
node3: 16:53:00 up 105 days, 6:40, 0 users, load average: 0.00, 0.01, 0.05
node4: 16:53:00 up 147 days, 9 min, 0 users, load average: 116.68, 111.40, 106.65
node5: 16:53:00 up 548 days, 6:05, 0 users, load average: 32.01, 32.02, 32.05
node6: 16:53:00 up 413 days, 5:19, 0 users, load average: 21.68, 20.74, 20.43
node7: 16:53:00 up 548 days, 6:04, 0 users, load average: 32.00, 32.01, 32.05
node8: 16:53:00 up 23 days, 6:08, 0 users, load average: 32.06, 32.05, 32.05
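With many nodes, the uptime output is easier to read once it is reduced to one number per node. The sketch below is one possible way to do that, assuming the output format shown above. The function name `busiest_nodes` is made up for this example. It extracts the 1-minute load average for each host and sorts the busiest nodes to the top.

```shell
# Read "host: ... load average: a, b, c" lines on stdin and print
# "host 1-minute-load", sorted with the highest load first.
busiest_nodes() {
    awk -F'load average: ' '
        NF == 2 {
            split($1, host, ":")   # host[1] is the hostname pdsh prepends
            split($2, load, ",")   # load[1] is the 1-minute load average
            print host[1], load[1]
        }' | sort -k2,2nr
}

# Usage (on a machine with pdsh installed):
#   pdsh -w ^/home/user1/pdsh/hosts uptime | busiest_nodes
```

Lines that do not contain a load average (for example ssh error messages) are skipped.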

Using Intel Cluster Checker (Part 3)

Framework Definition (FWD) Selection and Definition

If you wish to select a framework definition, you can list the available ones by using the command

% clck-analyze -X list

Framework definition file path: /usr/local/intel/cc2019/clck/2019.10/etc/fwd/
Framework definition list:
avx512_performance_ratios_priv avx512_performance_ratios_user basic_internode_connectivity basic_shells benchmarks bios_checker clock cluster cpu_admin cpu_base cpu_intel64 cpu_user dapl_fabric_providers_present dgemm_cpu_performance environment_variables_uniformity ethernet exclude_hpl file_system_uniformity hardware health health_admin health_base health_extended_user hpcg_cluster hpcg_single hpl_cluster_performance hyper_threading imb_allgather imb_allgatherv imb_allreduce imb_alltoall imb_barrier imb_bcast imb_benchmarks_blocking_collectives imb_benchmarks_non_blocking_collectives imb_gather imb_gatherv imb_iallgather imb_iallgatherv imb_iallreduce imb_ialltoall imb_ialltoallv imb_ibarrier imb_ibcast imb_igather imb_igatherv imb_ireduce imb_ireduce_scatter imb_iscatter imb_iscatterv imb_pingping imb_pingpong_fabric_performance imb_reduce imb_reduce_scatter imb_reduce_scatter_block imb_scatter imb_scatterv infiniband_admin infiniband_base infiniband_user intel_dc_persistent_memory_capabilities_priv intel_dc_persistent_memory_dimm_placement_priv intel_dc_persistent_memory_events_priv intel_dc_persistent_memory_firmware_priv intel_dc_persistent_memory_kernel_support intel_dc_persistent_memory_mode_uniformity_priv intel_dc_persistent_memory_namespaces_priv intel_dc_persistent_memory_priv intel_dc_persistent_memory_tools_priv intel_hpc_platform_base_compat-hpc-2018.0 intel_hpc_platform_base_core-intel-runtime-2018.0 intel_hpc_platform_base_high-performance-fabric-2018.0 intel_hpc_platform_base_hpc-cluster-2018.0 intel_hpc_platform_base_sdvis-cluster-2018.0 intel_hpc_platform_base_sdvis-core-2018.0 intel_hpc_platform_base_sdvis-single-node-2018.0 intel_hpc_platform_compat-hpc-2018.0 intel_hpc_platform_compliance_tcl_version intel_hpc_platform_core-2018.0 intel_hpc_platform_core-intel-runtime-2018.0 intel_hpc_platform_cpu_sdvis-single-node-2018.0 
intel_hpc_platform_firmware_high-performance-fabric-2018.0 intel_hpc_platform_high-performance-fabric-2018.0 intel_hpc_platform_hpc-cluster-2018.0 intel_hpc_platform_kernel_version_core-2018.0 intel_hpc_platform_libfabric_high-performance-fabric-2018.0 intel_hpc_platform_libraries_core-intel-runtime-2018.0 intel_hpc_platform_libraries_sdvis-cluster-2018.0 intel_hpc_platform_libraries_sdvis-core-2018.0 intel_hpc_platform_libraries_second-gen-xeon-sp-2019.0 intel_hpc_platform_linux_based_tools_present_core-intel-runtime-2018.0 intel_hpc_platform_memory_sdvis-cluster-2018.0 intel_hpc_platform_memory_sdvis-single-node-2018.0 intel_hpc_platform_minimum_memory_requirements_compat-hpc-2018.0 intel_hpc_platform_minimum_storage intel_hpc_platform_minimum_storage_sdvis-cluster-2018.0 intel_hpc_platform_minimum_storage_sdvis-single-node-2018.0 intel_hpc_platform_mount intel_hpc_platform_perl_core-intel-runtime-2018.0 intel_hpc_platform_rdma_high-performance-fabric-2018.0 intel_hpc_platform_sdvis-cluster-2018.0 intel_hpc_platform_sdvis-core-2018.0 intel_hpc_platform_sdvis-single-node-2018.0 intel_hpc_platform_second-gen-xeon-sp-2019.0 intel_hpc_platform_subnet_management_high-performance-fabric-2018.0 intel_hpc_platform_version_compat-hpc-2018.0 intel_hpc_platform_version_core-2018.0 intel_hpc_platform_version_core-intel-runtime-2018.0 intel_hpc_platform_version_high-performance-fabric-2018.0 intel_hpc_platform_version_hpc-cluster-2018.0 intel_hpc_platform_version_sdvis-cluster-2018.0 intel_hpc_platform_version_sdvis-core-2018.0 intel_hpc_platform_version_sdvis-single-node-2018.0 intel_hpc_platform_version_second-gen-xeon-sp-2019.0 iozone_disk_bandwidth_performance kernel_parameter_preferred kernel_parameter_uniformity kernel_version_uniformity local_disk_storage lsb_libraries lshw_disks lshw_hardware_uniformity memory_uniformity mpi mpi_bios mpi_environment mpi_ethernet mpi_libfabric mpi_local_functionality mpi_multinode_functionality mpi_prereq_admin mpi_prereq_user 
network_time_uniformity node_process_status opa_admin opa_base opa_user osu_allgather osu_allgatherv osu_allreduce osu_alltoall osu_alltoallv osu_barrier osu_bcast osu_benchmarks_blocking_collectives osu_benchmarks_non_blocking_collectives osu_benchmarks_point_to_point osu_bibw osu_bw osu_gather osu_gatherv osu_iallgather osu_iallgatherv osu_iallreduce osu_ialltoall osu_ialltoallv osu_ialltoallw osu_ibarrier osu_ibcast osu_igather osu_igatherv osu_ireduce osu_iscatter osu_iscatterv osu_latency osu_mbw_mr osu_reduce osu_reduce_scatter osu_scatter osu_scatterv perl_functionality precision_time_protocol privileged_user python_functionality rpm_snapshot rpm_uniformity second-gen-xeon-sp second-gen-xeon-sp_parallel_studio_xe_runtimes_2019.0 second-gen-xeon-sp_priv second-gen-xeon-sp_user select_solutions_redhat_openshift_base select_solutions_redhat_openshift_plus select_solutions_sim_mod_benchmarks_base_2018.0 select_solutions_sim_mod_benchmarks_plus_2018.0 select_solutions_sim_mod_benchmarks_plus_second_gen_xeon_sp select_solutions_sim_mod_priv_base_2018.0 select_solutions_sim_mod_priv_plus_2018.0 select_solutions_sim_mod_priv_plus_second_gen_xeon_sp select_solutions_sim_mod_user_base_2018.0 select_solutions_sim_mod_user_plus_2018.0 select_solutions_sim_mod_user_plus_second_gen_xeon_sp services_status sgemm_cpu_performance shell_functionality single std_libraries stream_memory_bandwidth_performance syscfg_settings_uniformity tcl_functionality tools
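The list is long, so it is often easier to filter it before picking a framework definition. The following is a small sketch of that idea. The helper name `filter_fwd` is made up for this example, and it assumes the names are separated by whitespace as in the listing above.

```shell
# Read a framework definition list on stdin and print, one per line,
# only the names that start with the given prefix.
filter_fwd() {
    tr -s ' \t' '\n' | grep "^$1"
}

# Usage (on a machine with Intel Cluster Checker installed):
#   clck-analyze -X list | filter_fwd mpi
#   clck-analyze -X mpi_prereq_user    # description of one definition
```

The second usage line relies on the -X option printing a description when it is given a framework definition name instead of "list".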

Node Roles:

The role annotation keyword is used to assign a node to one or more roles. A role describes the intended functionality of a node.

For example, the following nodefile defines 3 nodes: node1 is a head node, node2 is a compute node, and node3 is a login node.

node1 # role: head
node2 # role: compute
node3 # role: login

Valid node role values are described below.

- boot – Provides software imaging / provisioning capabilities.

- compute – Is a compute resource (mutually exclusive with enhanced).

- enhanced – Provides enhanced compute resources, for example, contains additional memory (mutually exclusive with compute).

- external – Provides an external network interface.

- head – Alias for the union of boot, external, job_schedule, login, network_address, and storage.

- job_schedule – Provides resource manager / job scheduling capabilities.

- login – Is an interactive login system.

- network_address – Provides network address to the cluster, for example, DHCP.

- storage – Provides network storage to the cluster, like NFS.
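To see which nodes carry a given role, the annotations can be read back out of the nodefile. This is only a sketch: the function name `nodes_with_role` is made up, it assumes the `# role:` annotation format from the example nodefile, and it does not expand the head alias into its member roles.

```shell
# Sample nodefile matching the example above, written to a temp file.
nodefile=$(mktemp)
cat > "$nodefile" <<'EOF'
node1 # role: head
node2 # role: compute
node3 # role: login
EOF

# Print the names of the nodes annotated with the given role.
#   $1 = role name, $2 = nodefile
nodes_with_role() {
    awk -v role="$1" '
        /^[ \t]*#/ { next }                      # skip commented-out lines
        {
            in_role = 0
            for (i = 2; i <= NF; i++) {
                if ($i == "#")     { in_role = 0; continue }  # new annotation
                if ($i == "role:") { in_role = 1; continue }
                if (in_role && $i == role) { print $1; break }
            }
        }' "$2"
}

nodes_with_role compute "$nodefile"   # prints node2
```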

More Information:

- Using Intel Cluster Checker (Part 1)

- Using Intel Cluster Checker (Part 2)

- Using Intel Cluster Checker (Part 3)

User Guide:

Using Intel Cluster Checker (Part 2)

Continuation of Using Intel Cluster Checker (Part 1)

Setup Environment Variables

In your .bashrc, add the following

export CLCK_ROOT=/usr/local/intel/cc2019/clck/2019.10
export CLCK_SHARED_TEMP_DIR=/scratch/tmp
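A quick sanity check of these two settings can catch a mistyped path early. The snippet below is a minimal sketch using the paths from this post. It only tests that the directories exist on the local machine, and that the shared temp directory is writable.

```shell
export CLCK_ROOT=/usr/local/intel/cc2019/clck/2019.10
export CLCK_SHARED_TEMP_DIR=/scratch/tmp

# Report whether each directory exists on this machine.
for dir in "$CLCK_ROOT" "$CLCK_SHARED_TEMP_DIR"; do
    if [ -d "$dir" ]; then
        echo "ok: $dir"
    else
        echo "missing: $dir"
    fi
done

# The shared temp directory also needs to be writable by the user running clck.
if [ -d "$CLCK_SHARED_TEMP_DIR" ] && [ ! -w "$CLCK_SHARED_TEMP_DIR" ]; then
    echo "not writable: $CLCK_SHARED_TEMP_DIR"
fi
```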

Command Line Options

-c / --config=FILE: Specifies a configuration file. The default configuration file is CLCK_ROOT/etc/clck.xml.

-C / --re-collect-data: Attempts to re-collect any missing or old data for use in analysis. This option only applies to data collection.

-D / --db=FILE: Specifies the location of the database file. This option works in clck-analyze and in clck, but not currently in clck-collect.

-f / --nodefile: Specifies a nodefile containing the list of nodes, one per line. If a nodefile is not specified for clck or clck-collect, a Slurm query will be used to determine the available nodes. If no nodefile is specified for clck-analyze, the nodes already present in the database will be used.

-F / --fwd=FILE: Specifies a framework definition. If a framework definition is not specified, the health framework definition is used. This option can be used multiple times to specify multiple framework definitions. To see a list of available framework definitions, use the command line option -X list.

-h / --help: Displays the help message.

-l / --log-level: Specifies the output level. Recognized values are (in increasing order of verbosity): alert, critical, error, warning, notice, info, and debug. The default log level is error.

-M / --mark-snapshot: Takes a snapshot of the data used in an analysis. The string used to mark the data cannot contain the comma character “,” or spaces. This option only applies to analysis.

-n / --node-include: Displays only the specified nodes in the analyzer output.

-o / --logfile: Specifies a file where the results from the run are written. By default, results are written to clck_results.log.

-r / --permutations: Number of permutations of nodes to use when running cluster data providers. By default, one permutation will run. This option only applies to data collection.

-S / --ignore-subclusters: Ignores the subcluster annotations in the nodefile. This option only applies to data collection.

-t / --threshold-too-old: Sets the minimum number of days since collection that will trigger a data too old error. This option only applies to data analysis.

-v / --version: Prints the version and exits.

-X / --FWD_description: Prints a description of the framework definition if available. If the value passed is “list”, then it prints a list of found framework definitions.

-z / --fail-level: Specifies the lowest severity level at which found issues fail. Recognized values are (in increasing order of severity): informational, warning, and critical. The default level at which issues cause a failure is warning.

--sort-asc: Organizes the output in ascending order of the specified field. Recognized values are “id”, “node”, and “severity”.

--sort-desc: Organizes the output in descending order of the specified field. Recognized values are “id”, “node”, and “severity”.

For more information about the available command line options and their uses, run Intel® Cluster Checker with the -h option, or see the man pages.
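Several of these options are usually combined in one run. The sketch below shows two such combinations using only the short options described above. The `run` wrapper is a made-up helper that prints the command instead of executing it when the tool is not installed, and the file names are placeholders.

```shell
# Run a command if it is available, otherwise just show what would be run.
run() {
    if command -v "$1" >/dev/null 2>&1; then
        "$@"
    else
        echo "would run: $*"
    fi
}

# Collect and analyze with two framework definitions, more verbose logging,
# and a custom results file.
run clck -f nodefile -F health_base -F mpi_prereq_user -l warning -o my_results.log

# Re-analyze the data already in the database, failing only on critical issues.
run clck-analyze -f nodefile -F health_base -z critical
```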

More Information:

- Using Intel Cluster Checker (Part 1)

- Using Intel Cluster Checker (Part 2)

- Using Intel Cluster Checker (Part 3)

User Guide:

Using Intel Cluster Checker (Part 1)

What is Intel Cluster Checker?

Intel® Cluster Checker provides tools to collect data from the cluster, analyze the collected data, and produce a clear report of the analysis. Using Intel® Cluster Checker helps to quickly identify issues and improve utilization of resources.

Intel® Cluster Checker verifies the configuration and performance of Linux®-based clusters through analysis of cluster uniformity, performance characteristics, functionality and compliance with Intel® High Performance Computing (HPC) specifications. Data collection tools and analysis provide actionable remedies to identified issues. Intel® Cluster Checker tools and analysis are ideal for use by developers, administrators, architects, and users to easily identify issues within a cluster.

Installing Intel Cluster Checker Using Yum Repository

If you are installing with Yum, do take a look at Intel Cluster Checker 2019 Installation

Otherwise, if you have the tar.gz package, you can simply untar it

Environment Setup

# source /usr/local/intel/2018u3/bin/compilervars.sh intel64

# source /usr/local/intel/2018u3/mkl/bin/mklvars.sh intel64

# source /usr/local/intel/2018u3/impi/2018.3.222/bin64/mpivars.sh intel64

# source /usr/local/intel/2018u3/parallel_studio_xe_2018/bin/psxevars.sh intel64

# export MPI_ROOT=/usr/local/intel/2018u3/impi/2018.3.222/intel64

# source /usr/local/intel/cc2019/clck/2019.10/bin/clckvars.sh

Create a nodefile and put the hosts in

% vim nodefile

node1
node2
node3

Running Intel Cluster Checker

* Make sure you can SSH to the nodes without a password. See SSH Login without Password
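The passwordless SSH requirement can be checked for every node in the nodefile before starting a run. The following is a sketch only: the function name and the `SSH_CMD` override are made up for this example. It relies on the standard OpenSSH options `BatchMode=yes`, which makes ssh fail instead of prompting for a password, and `ConnectTimeout`.

```shell
# Try a non-interactive ssh to every node listed in the nodefile.
# Returns non-zero if any node cannot be reached without a password.
#   $1 = nodefile (first word of each line is the hostname)
check_passwordless_ssh() {
    nodefile=$1
    ssh_cmd=${SSH_CMD:-ssh}
    failed=0
    while read -r node rest; do
        # Skip blank lines and commented-out nodes.
        case $node in
            ''|'#'*) continue ;;
        esac
        # -n keeps ssh from swallowing the rest of the nodefile on stdin.
        if "$ssh_cmd" -n -o BatchMode=yes -o ConnectTimeout=5 "$node" true 2>/dev/null; then
            echo "ok: $node"
        else
            echo "FAILED: $node"
            failed=1
        fi
    done < "$nodefile"
    return "$failed"
}

# Usage:
#   check_passwordless_ssh nodefile && clck -f nodefile
```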

% clck -f nodefile

Example of a run:

Running Collect

................................................................................................................................................................................................................

Running Analyze

SUMMARY
Command-line: clck -f nodefile
Tests Run: health_base

**WARNING**: 3 tests failed to run. Information may be incomplete. See clck_execution_warnings.log for more information.

Overall Result: 8 issues found - HARDWARE UNIFORMITY (2), PERFORMANCE (2), SOFTWARE UNIFORMITY (4)

-----------------------------------------------------------------------------------------------------------------------------------------

8 nodes tested: node010, node[003-009]
0 nodes with no issues:
8 nodes with issues: node010, node[003-009]

-----------------------------------------------------------------------------------------------------------------------------------------

FUNCTIONALITY
No issues detected.

HARDWARE UNIFORMITY
The following hardware uniformity issues were detected:
1. The InfiniBand PCI physical slot for device 'MT27800 Family [ConnectX-5]'

PERFORMANCE
The following performance issues were detected:
1. Zombie processes detected. 1 node: node010
2. Processes using high CPU. 7 nodes: node010, node[003,005-009]

SOFTWARE UNIFORMITY
The following software uniformity issues were detected:
1. The OFED version, 'MLNX_OFED_LINUX-4.5-1.0.1.0 (OFED-4.5-1.0.1)', is not uniform..... 5 nodes: node[003-004,006-007,009]
2. The OFED version, 'MLNX_OFED_LINUX-4.3-1.0.1.0 (OFED-4.3-1.0.1)', is not uniform..... 3 nodes: node010, node[005,008]
3. Environment variables are not uniform across the nodes. .....
4. Inconsistent Ethernet driver version. .....

See the following files for more information: clck_results.log, clck_execution_warnings.log

Intel MPI Library Troubleshooting

If you are an administrator and want to make sure your cluster is set up to work with the Intel® MPI Library, run the following

% clck -f nodefile -F mpi_prereq_admin

If you are a non-privileged user and want to make sure your cluster is set up to work with the Intel® MPI Library, run the following

% clck -f nodefile -F mpi_prereq_user
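The choice between the two framework definitions can also be scripted. This is a small sketch, assuming that "admin" means running as root (uid 0), which may not match how privileges are set up at your site. The function name `mpi_prereq_fwd` is made up for this example.

```shell
# Pick the MPI prerequisite framework definition for a given numeric uid.
mpi_prereq_fwd() {
    if [ "$1" -eq 0 ]; then
        echo mpi_prereq_admin
    else
        echo mpi_prereq_user
    fi
}

# Show the command that matches the current user.
fwd=$(mpi_prereq_fwd "$(id -u)")
echo "clck -f nodefile -F $fwd"
```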

More Information:

- Using Intel Cluster Checker (Part 1)

- Using Intel Cluster Checker (Part 2)

- Using Intel Cluster Checker (Part 3)

User Guide:

MPI related Errors for ANSYS-20.1

One of my users was facing the following errors when running ANSYS-20.1 Fluent

Error: eval: unbound variable
Error Object: c-flush

...
...
...
libibverbs: Warning: no userspace device-specific driver found for /sys/class/infiniband_verbs/uverbs1
libibverbs: Warning: no userspace device-specific driver found for /sys/class/infiniband_verbs/uverbs2
libibverbs: Warning: no userspace device-specific driver found for /sys/class/infiniband_verbs/uverbs1
libibverbs: Warning: no userspace device-specific driver found for /sys/class/infiniband_verbs/uverbs2
libibverbs: Warning: no userspace device-specific driver found for /sys/class/infiniband_verbs/uverbs1
fluent_mpi.20.1.0: Rank 0:110: MPI_Init: ibv_create_qp(left ring) failed
fluent_mpi.20.1.0: Rank 0:110: MPI_Init: probably you need to increase pinnable memory in /etc/security/limits.conf
fluent_mpi.20.1.0: Rank 0:110: MPI_Init: ibv_ring_createqp() failed
fluent_mpi.20.1.0: Rank 0:110: MPI_Init: Can't initialize RDMA device
fluent_mpi.20.1.0: Rank 0:110: MPI_Init: Internal Error: Cannot initialize RDMA protocol
...
...
...

There were a few steps that we took to resolve the issues. Do look at the generated logs, which give lots of information. If you are using PBS Professional, your Fluent script may look like this.

fluent 3ddp -g -t$nprocs -mpi=ibmmpi -cflush -ssh -lsf -cnf=$PBS_NODEFILE -pinfiniband -nmon -i $inputfile >& $PBS_JOBID.log 2>&1

- Do note the “-cflush” flag: it should be placed after the -mpi flag. From our observation, if -cflush is placed before -mpi, it seems to initiate the flush, but another error is generated.

- Make sure you choose the right mpi parameter.
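The MPI_Init messages in the log point at the pinnable (locked) memory limit, so it is worth checking that limit as well. The snippet below is a minimal sketch of such a check. It is best run from inside a job on a compute node, since the limits seen by a batch job may differ from those of a login shell. The limits.conf lines in the comments are the usual way to raise the limit, shown as an assumption about your setup and not as the fix that was applied here.

```shell
# Show the locked-memory limit of the current shell (in kB, or "unlimited").
memlock=$(ulimit -l)

if [ "$memlock" = "unlimited" ]; then
    echo "memlock: unlimited"
else
    echo "memlock: ${memlock} kB (RDMA libraries may need more)"
    # Typical /etc/security/limits.conf entries to raise it (needs root,
    # and a new login session or scheduler daemon restart to take effect):
    #   * soft memlock unlimited
    #   * hard memlock unlimited
fi
```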